Perplexity AI

Overview

Perplexity is an AI search app well suited to summarizing the latest social media trends and posts. It searches across platforms such as YouTube, Reddit, Twitter, and LinkedIn, and links back to the original posts it draws on.

Perplexity AI is an application-layer generative AI company: its core value lies not in model or tech-stack capabilities but in its ability to iterate quickly on the product.

On 8/12/22, Perplexity released a beta version of its search product, Ask, which answers user questions with GPT-3.5 output validated against Bing search results. This earliest product resembled a search engine: after the user entered text into the dialog box at the top, a two-part result appeared:

- The first part is the AI-generated summary, with numbered citations;

- The second part lists the source links referenced during generation, always exactly three. Below the generated content, users can give feedback (like or dislike) or share the answer on Twitter to drive organic, viral growth.

AI-driven "Swiss Army Knife" for information discovery and curiosity fulfillment

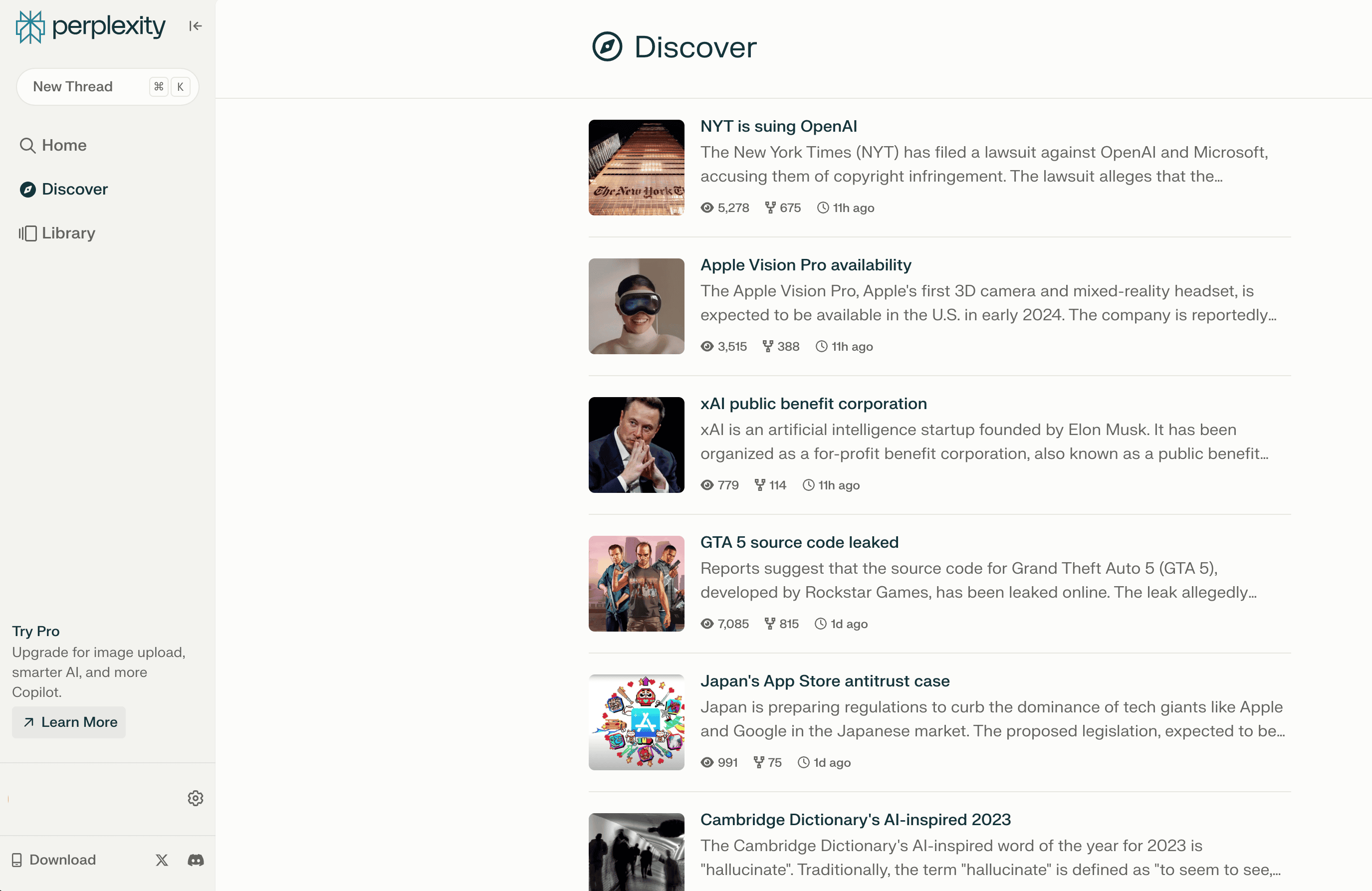

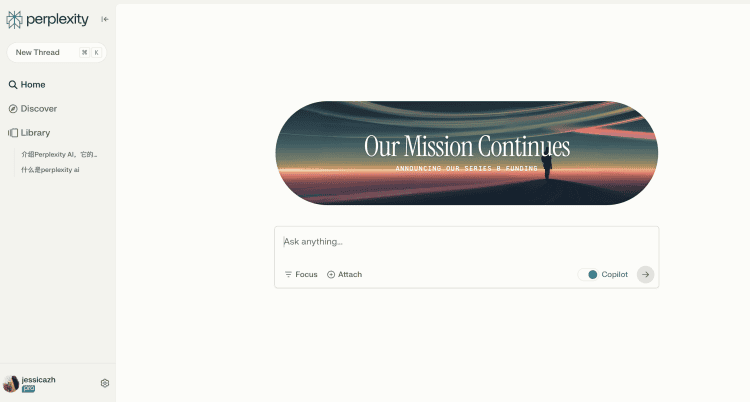

When you open the Perplexity page, the overall layout is very simple. The left column contains "Home" (the homepage), "Discover" (results of popular searches), and "Library" (your own question history), while the main area on the right is a dialog box where you enter questions.

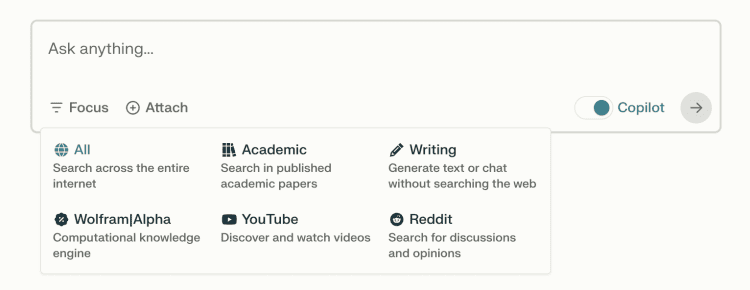

At a glance, it doesn't look much different from ChatGPT or Bard. The first highlight, however, is the "Focus" option in the bottom-left corner of this small dialog box.

Tap it and you'll see several options: in addition to searching the entire web, you can restrict the search to academic papers, pull discussion comments from Reddit, find and watch videos on YouTube, query the computational knowledge engine Wolfram Alpha, or simply chat and generate text.

By customizing the scope of their searches, users avoid hunting for a needle in a haystack of irrelevant information and get what they need faster and more accurately, which makes the feature especially popular with academics and knowledge workers.

The "Copilot" switch in the dialog box is a research assistant feature that Perplexity introduced in May this year. When turned on, it makes the search more interactive: it offers personalized suggestions based on user input, refines the search results, and guides the user further.

Originally powered by GPT-4, this feature was switched in August to default to a fine-tuned GPT-3.5, which greatly improves response speed without compromising reasoning quality.

Perplexity combines traditional indexing with the reasoning and text-transformation capabilities of large models. It works as follows: when a user enters a query, it interprets and reformulates the query and retrieves the relevant links from its real-time index. It then hands the task of answering to the LLM, asking it to read all the links, extract the relevant paragraphs from each, and integrate them into an accurate and comprehensive answer that can be traced back to its sources and followed up with further questions.
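The flow described above can be sketched in a few lines of Python. This is only an illustrative toy, not Perplexity's actual implementation: the in-memory `TOY_INDEX`, the keyword-overlap scoring, and every function name (`rewrite_query`, `retrieve`, `extract`, `answer`) are assumptions made for the example, and the LLM steps are replaced by simple keyword matching.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    paragraphs: list[str]


# Hypothetical stand-in for a real-time web index.
TOY_INDEX = [
    Page("https://example.com/a",
         ["Perplexity cites its sources.", "Unrelated note about cooking."]),
    Page("https://example.com/b",
         ["Search engines rank links by relevance.",
          "Perplexity combines search with an LLM."]),
    Page("https://example.com/c", ["Gardening tips for spring."]),
]

STOPWORDS = {"the", "a", "an", "is", "how", "does", "what", "with", "its", "of", "to"}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and strip basic punctuation from each word."""
    words = (w.strip("?.,!").lower() for w in text.split())
    return {w for w in words if w}


def rewrite_query(query: str) -> set[str]:
    """Step 1: reduce the query to keywords (a real system might use an LLM)."""
    return tokenize(query) - STOPWORDS


def score(text: str, keywords: set[str]) -> int:
    """Count how many query keywords appear in the text."""
    return len(tokenize(text) & keywords)


def retrieve(keywords: set[str], index: list[Page], top_k: int = 3) -> list[Page]:
    """Step 2: pick the pages with the highest keyword overlap."""
    scored = [(sum(score(p, keywords) for p in page.paragraphs), page)
              for page in index]
    ranked = sorted((s for s in scored if s[0] > 0),
                    key=lambda s: s[0], reverse=True)
    return [page for _, page in ranked[:top_k]]


def extract(page: Page, keywords: set[str]) -> list[str]:
    """Step 3: keep only the paragraphs that mention a keyword."""
    return [p for p in page.paragraphs if score(p, keywords) > 0]


def answer(query: str, index: list[Page] = TOY_INDEX) -> dict:
    """Step 4: stitch the extracted paragraphs into a cited answer.

    A real system would ask an LLM to write the summary; here the
    paragraphs are simply concatenated with numbered citation markers.
    """
    keywords = rewrite_query(query)
    pages = retrieve(keywords, index)
    lines, sources = [], []
    for n, page in enumerate(pages, start=1):
        lines.extend(f"{para} [{n}]" for para in extract(page, keywords))
        sources.append(page.url)
    return {"summary": " ".join(lines), "sources": sources}


if __name__ == "__main__":
    result = answer("How does Perplexity search?")
    print(result["summary"])
    print(result["sources"])
```

Each citation marker `[n]` in the summary points at the n-th entry of `sources`, which mirrors the summary-plus-source-links layout of the product. The cap of three sources is expressed here as the `top_k` default.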